Generative AI (GenAI) initiatives are no longer just experimental. They are emerging as critical components of enterprise strategy, with real budgets allocated and a few trailblazing use cases already in production. Security and legal teams that were once hesitant are now aligning with these initiatives, a shift driven by the rise of privacy-focused open-source alternatives such as DBRX, Llama 3.1, and Mistral Large. Despite these advancements, Gartner predicts that by the end of 2025, at least 30% of GenAI projects will be abandoned after proof of concept due to challenges such as poor data quality, insufficient risk controls, escalating costs, and ambiguous business value. While open-source solutions have made it easier to build proofs of concept, moving to production requires careful consideration of scaling, performance, security, quality monitoring, and cost management. These complexities have prompted a more cautious approach to ensure that GenAI deployments meet stringent enterprise standards and deliver sustainable value.

Optimizing Deployments with Compound AI Systems and Generative AI Foundations

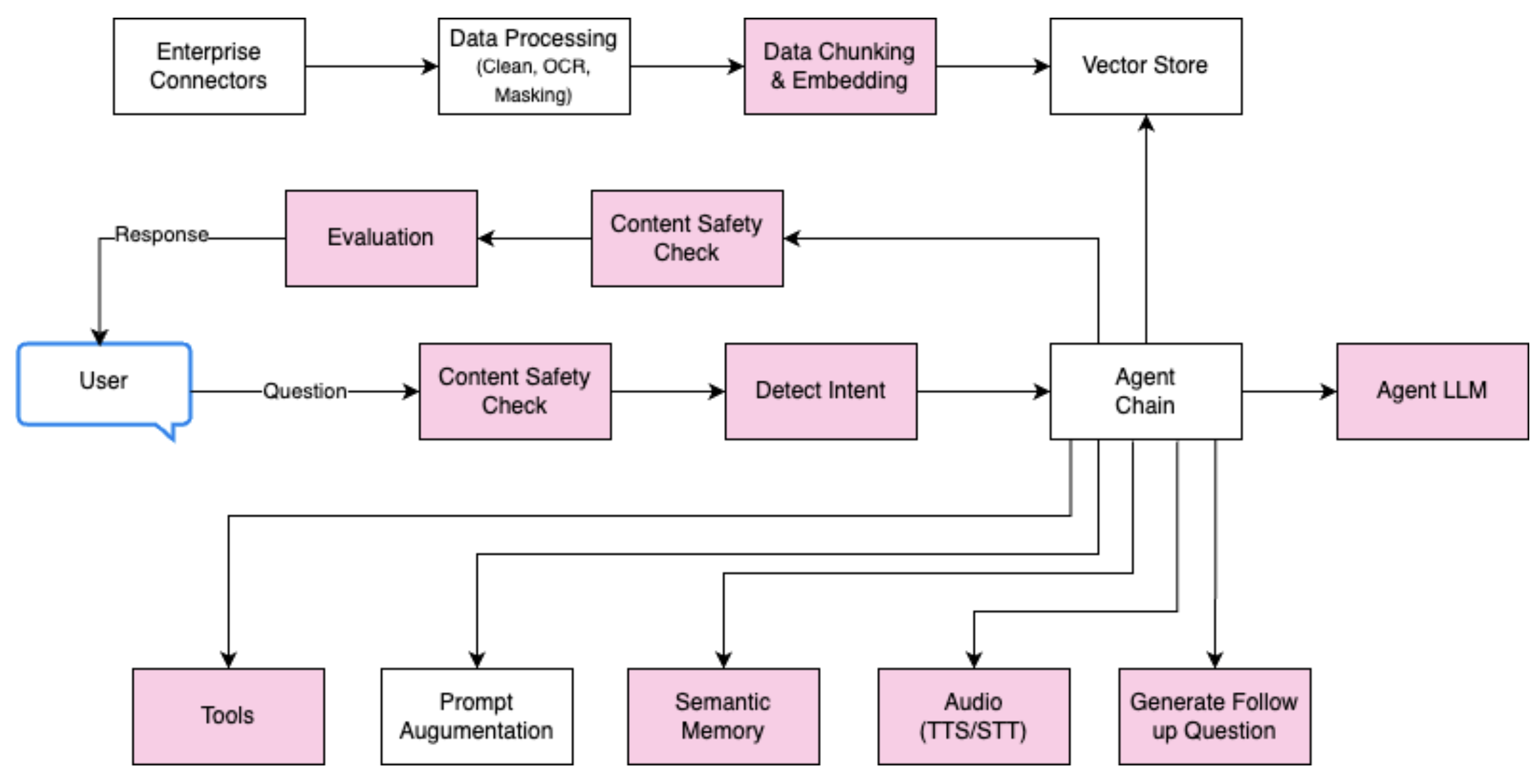

According to an article from Berkeley Artificial Intelligence Research (BAIR), compound AI systems achieve state-of-the-art results by integrating multiple specialized components rather than relying on monolithic models alone. Enterprises are increasingly adopting these systems to combine best-of-breed capabilities tailored to each use case. A typical production-grade agentic AI system involves multiple stages, each powered by a purpose-built large language model (LLM). The diagram above illustrates such a system, with an ingestion pipeline and an agentic query pipeline in which several LLM calls are executed before the response is delivered to the user. This design allows for various optimizations, such as using smaller open-source language models to reduce cost and latency.

Capabilities that are essential for production deployment are often overlooked during the proof-of-concept stage. Consequently, enterprises that need to scale many Generative AI use cases (typically 50 to 300) tend to delay their first production deployment or cannot expand projects beyond initial implementations. To address this, enterprises devote valuable internal resources to establishing a Generative AI Foundation that streamlines the deployment of safe, high-quality applications.
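To make the compound pattern concrete, here is a minimal sketch of an ingestion pipeline and a multi-stage query pipeline. It is an illustration under stated assumptions, not the architecture of any specific product: `CompoundSystem`, `ModelFn`, the stage names, and the keyword-overlap retrieval are all hypothetical, and the two "models" are plain Python functions standing in for real LLM endpoints.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical stand-in for any model endpoint: prompt in, text out.
ModelFn = Callable[[str], str]


@dataclass
class CompoundSystem:
    rewrite_model: ModelFn  # smaller model that normalizes the user query
    answer_model: ModelFn   # larger model that drafts the final answer
    knowledge_base: List[str] = field(default_factory=list)
    trace: List[Tuple[str, str]] = field(default_factory=list)

    def ingest(self, documents: List[str]) -> None:
        """Ingestion pipeline: normalize whitespace and keep non-empty documents."""
        for doc in documents:
            text = " ".join(doc.split())
            if text:
                self.knowledge_base.append(text)

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        """Toy keyword-overlap retrieval standing in for a vector search."""
        terms = set(query.lower().split())
        scored = [(len(terms & set(doc.lower().split())), doc)
                  for doc in self.knowledge_base]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for score, doc in scored[:k] if score > 0]

    def query(self, user_input: str) -> str:
        """Query pipeline: rewrite, retrieve, then answer, recording each stage."""
        rewritten = self.rewrite_model(user_input)
        self.trace.append(("rewrite", rewritten))
        context = self.retrieve(rewritten)
        self.trace.append(("retrieve", " | ".join(context)))
        prompt = "Context:\n" + "\n".join(context) + "\nQuestion: " + rewritten
        answer = self.answer_model(prompt)
        self.trace.append(("answer", answer))
        return answer


# Stub models so the sketch runs without any external service.
system = CompoundSystem(
    rewrite_model=lambda q: q.strip().lower(),
    answer_model=lambda p: "Based on: " + p.splitlines()[1],
)
system.ingest(["Refunds are processed in 5 days.", "   ", "Shipping is free."])
print(system.query("  Refunds policy "))
```

Because each stage is an independent callable, a smaller model can be swapped into one stage without touching the others, which is the cost and latency lever the compound approach relies on.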

Building a Scalable, Cloud-Agnostic GenAI Foundation with Databricks and Karini AI

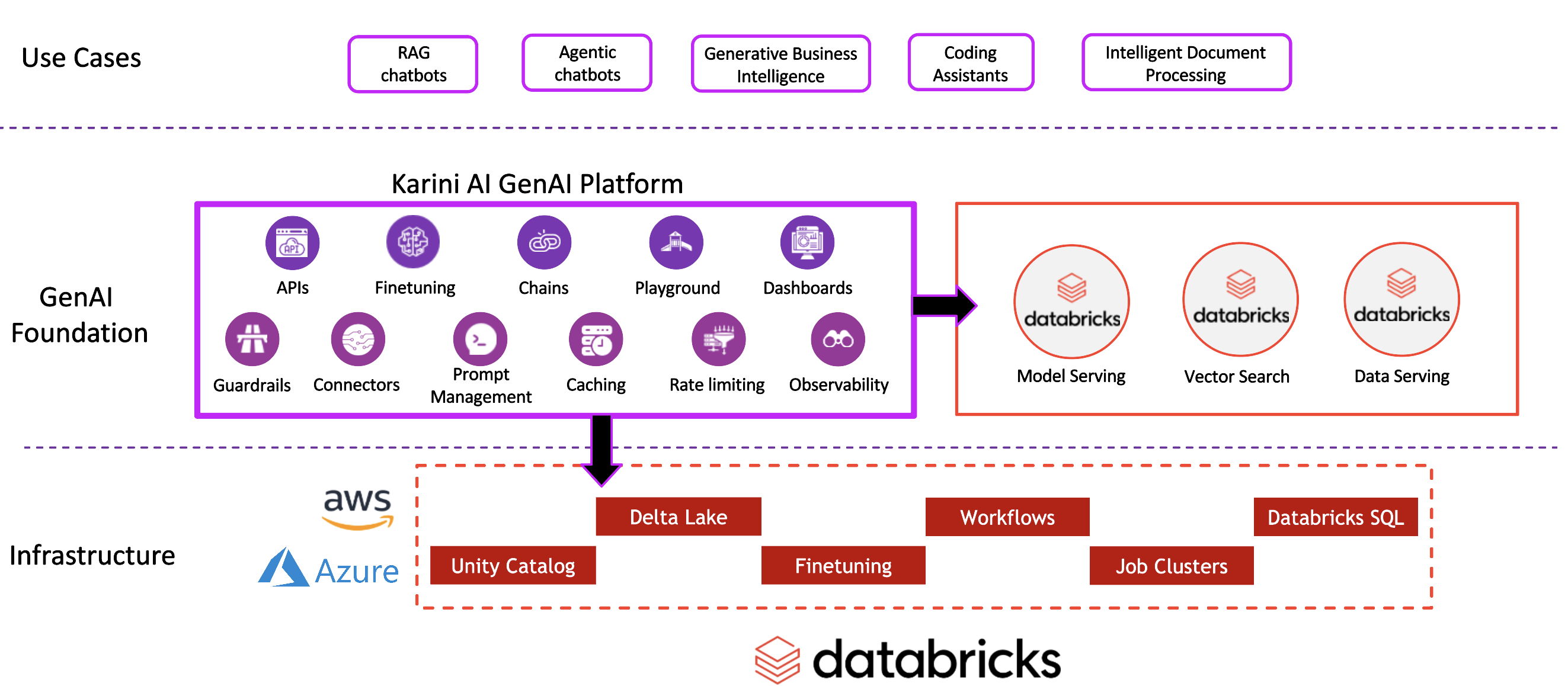

The GenAI foundation is a platform that equips enterprises with the tools to develop, manage, and monitor secure, high-quality Generative AI applications. It is scalable, adaptable, and cloud-agnostic, so it can evolve with the changing AI landscape. It creates LLM-ready data and infrastructure and provides access to cutting-edge open-source LLMs and third-party model hubs. Databricks, a comprehensive, unified platform, offers specialized GenAI products for data preparation, a managed vector store, optimized LLM serving, and fine-tuning. Karini AI, which works with the Databricks Data Intelligence Platform, enables enterprises to build and deploy Generative AI applications in under 30 minutes, accelerating production deployment by 20x compared to traditional methods.

Karini AI’s no-code recipes allow practitioners to swiftly build Generative AI applications by assembling a data ingestion pipeline. Whether connecting to enterprise data sources or leveraging existing data registered in Unity Catalog volumes, users can easily configure the settings to preprocess and chunk source data into LLM-ready formats, targeting Databricks vector store indexes. Once the pipeline is set up, users can execute the ingestion securely within their workspace and observe real-time updates on the progress of knowledge base generation.
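The chunking step mentioned above can be pictured with a small sketch. The article does not describe the actual chunking strategy or settings, so this is only one common approach (fixed-size word windows with overlap); the function name `chunk_text` and its parameters are hypothetical.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into word windows of `chunk_size`, sharing `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


print(chunk_text("a b c d e f g h i j", chunk_size=4, overlap=1))
```

The overlap keeps a little shared context between neighboring chunks, which tends to help retrieval when a relevant sentence straddles a chunk boundary. Each chunk would then be embedded and written to a vector index.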

Enterprises can use Karini AI’s prompt playground to create an agent, beginning with a prompt template and registering relevant tools such as a Databricks knowledge base, web services, messaging, custom functions, or Databricks SQL. The agentic prompt can be tested across multiple models to compare response quality and select the best-performing model. These prompt experiments can be saved in Karini AI’s prompt store for tracking and version control, allowing users to quickly revert to previous prompt versions.
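The two ideas in this paragraph, versioned prompts and side-by-side model comparison, can be sketched in a few lines. This is not Karini AI's API: `PromptStore`, `compare_models`, the model names, and the length-based score are hypothetical placeholders, and a real evaluation would use a proper quality metric or human review.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class PromptStore:
    """Hypothetical in-memory store that keeps every saved version of a prompt."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        history = self.versions.setdefault(name, [])
        history.append(template)
        return len(history)  # version numbers start at 1

    def get(self, name: str, version: Optional[int] = None) -> str:
        history = self.versions[name]
        if version is None:
            return history[-1]  # latest version
        if not 1 <= version <= len(history):
            raise IndexError(f"no version {version} for prompt {name!r}")
        return history[version - 1]

    def revert(self, name: str, version: int) -> int:
        """Re-save an older version as the newest one, preserving history."""
        return self.save(name, self.get(name, version))


def compare_models(
    prompt: str,
    models: Dict[str, Callable[[str], str]],
    score: Callable[[str], float],
) -> List[Tuple[str, float]]:
    """Run one prompt through every model and rank by score, best first."""
    results = [(name, score(model(prompt))) for name, model in models.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)


store = PromptStore()
store.save("support", "Answer briefly: {question}")
store.save("support", "Answer in detail: {question}")
store.revert("support", 1)  # version 3 is now a copy of version 1

prompt = store.get("support").format(question="How do refunds work?")
ranking = compare_models(
    prompt,
    models={  # stub models; real ones would call a serving endpoint
        "model-a": lambda p: "Refunds take 5 days.",
        "model-b": lambda p: "Yes.",
    },
    score=len,  # toy score: longer reply wins
)
print(ranking[0][0])
```

Reverting by appending a copy, rather than deleting newer versions, keeps the full experiment history available for later comparison.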

Once the prompt is finalized, it can be deployed within a no-code recipe with output nodes. These output nodes offer optional settings for enabling intent detection, guardrails, follow-up questions, and online evaluation. Exporting the recipe produces a chatbot whose styling and feedback options can be customized. Karini AI automatically maintains traces of the entire chain, which is invaluable for red-teaming efforts before releasing applications to production. Additionally, Karini AI keeps a record of historical conversations along with user feedback. These conversations can be exported to create fine-tuning datasets compatible with Databricks LLM fine-tuning, enabling continuous improvement of the AI models.
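As a rough illustration of turning conversation history into a fine-tuning dataset, the sketch below keeps only positively rated exchanges and writes them as chat-style JSONL. The record fields (`question`, `answer`, `feedback`), the function name, and the rating scale are assumptions made for this example; the exact schema expected by a given fine-tuning service should be checked against its documentation.

```python
import json
from typing import Dict, List


def to_finetuning_jsonl(conversations: List[Dict], min_feedback: int = 1) -> str:
    """Convert rated conversations to JSONL, one chat example per line."""
    lines = []
    for conv in conversations:
        # Skip exchanges with no feedback or with a rating below the threshold.
        if conv.get("feedback", 0) < min_feedback:
            continue
        record = {
            "messages": [
                {"role": "user", "content": conv["question"]},
                {"role": "assistant", "content": conv["answer"]},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


# Hypothetical history: feedback is +1 (thumbs up), -1 (thumbs down), or absent.
history = [
    {"question": "How long do refunds take?", "answer": "About 5 days.", "feedback": 1},
    {"question": "Is shipping free?", "answer": "I am not sure.", "feedback": -1},
    {"question": "Where is my order?", "answer": "Check the tracking page."},
]
dataset = to_finetuning_jsonl(history)
print(dataset)
```

Filtering on feedback before export is a simple way to avoid training on answers users already flagged as poor.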

Karini AI also offers built-in cost and performance dashboards, enabling teams to track application-level spending and monitor performance metrics for continuous optimization.
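The arithmetic behind application-level cost tracking is straightforward and can be sketched as follows. The prices, model names, and the `cost_by_application` helper are hypothetical; actual rates depend on the model and provider.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical prices in USD per 1,000 tokens: (input, output).
PRICES: Dict[str, Tuple[float, float]] = {
    "small-model": (0.001, 0.002),
    "large-model": (0.01, 0.03),
}

# One logged call: (application, model, input_tokens, output_tokens).
Call = Tuple[str, str, int, int]


def cost_by_application(calls: Iterable[Call]) -> Dict[str, float]:
    """Sum token costs per application across all logged model calls."""
    totals: Dict[str, float] = defaultdict(float)
    for app, model, tokens_in, tokens_out in calls:
        price_in, price_out = PRICES[model]
        totals[app] += tokens_in / 1000 * price_in + tokens_out / 1000 * price_out
    return dict(totals)


calls = [
    ("chatbot", "small-model", 2000, 1000),
    ("chatbot", "large-model", 1000, 500),
    ("search", "small-model", 4000, 0),
]
for app, cost in cost_by_application(calls).items():
    print(f"{app}: ${cost:.4f}")
```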

Unlocking Innovation with Karini AI and Databricks: A Powerful Partnership

Karini AI, powered by Databricks Mosaic AI, offers ready-to-use foundational capabilities to accelerate innovation. To summarize, here are the benefits for enterprises:

Accelerate Time to Market: Karini AI enables beginners and seasoned professionals to rapidly prototype and deploy generative AI applications. With the ability to move from concept to production 20x faster, enterprises can innovate swiftly without needing deep expertise in GenAI or Databricks.

Eliminate Technical Debt: By leveraging standardized, optimized blueprints, Karini AI helps enterprises build, manage, and scale GenAI applications across numerous projects. This approach reduces reliance on specialized talent and custom code, minimizing technical debt and ensuring sustainable growth. The platform's efficiency ensures enterprises can focus on innovation rather than being bogged down by technical issues.

Future-Proof Your GenAI Investments: Karini AI's GenAI foundation on Databricks stays at the forefront of AI advancements, allowing seamless migration to cutting-edge models and techniques. Whether your use cases involve RAG, Agents, Multi-Agent systems, or Generative BI, Karini AI ensures that your applications remain state-of-the-art.

Cloud-Agnostic Flexibility: The platform's integration with Databricks Model Serving ensures optimized deployment of the latest open-source models while offering secure access to third-party model hubs like Azure OpenAI, Amazon Bedrock, and Google Vertex AI. This cloud-agnostic approach provides flexibility and scalability across providers.

Enterprise-Grade Features: The combined platform offers robust enterprise features, including Guardrails for safety, semantic caching for cost efficiency, prompt management and tuning, and comprehensive evaluation metrics and dashboards. These tools give enterprises the control and insights to manage and optimize GenAI applications effectively.
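Of the features listed above, semantic caching is the one most easily shown in code. The sketch below is a generic illustration, not the platform's implementation: `SemanticCache`, `embed`, and the 0.8 threshold are hypothetical, and a bag-of-words vector stands in for the learned embeddings a production cache would use.

```python
import math
from collections import Counter
from typing import List, Optional, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use a model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return a stored response when a new query is similar enough to an old one."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))

    def get(self, query: str) -> Optional[str]:
        vector = embed(query)
        best_score, best_response = 0.0, None
        for stored_vector, response in self.entries:
            score = cosine(vector, stored_vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


cache = SemanticCache()
cache.put("what is the refund policy", "Refunds are issued within 30 days.")
print(cache.get("What is the REFUND policy"))    # similar query: cache hit
print(cache.get("how do I reset my password"))   # unrelated query: cache miss
```

A cache hit skips the LLM call entirely, which is where the cost saving comes from. The threshold trades savings against the risk of returning an answer to a question that only looks similar.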

Conclusion

Generative AI is transforming industries, driving innovation, and reshaping business functions at an unprecedented pace. Enterprises must invest in a robust GenAI foundation and scalable platform to fully harness this potential. Karini AI, powered by Databricks Mosaic AI, offers a comprehensive solution that eliminates inefficiencies, accelerates innovation, and reduces the total cost of ownership—all without vendor lock-in. By adopting this powerful combination, businesses can enhance their competitive edge and stay at the forefront of the AI-driven future.