Introduction

Generative AI has become a shared C-Level priority with many enterprises setting goals in their annual statement and numerous press releases. As Generative AI is gaining traction, there is much anticipation around their evolving model performance capabilities. However, as developers increasingly move beyond Generative AI pilots, the trend is shifting to compound systems. The SOTA results often come from compound systems incorporating multiple components rather than relying solely on standalone models. A recent study by MIT Research has observed that 60% of LLM deployments in businesses incorporate some form of retrieval-augmented generation (RAG), with 30% utilizing multi-step chains or compound systems.

Rise of Compound Systems

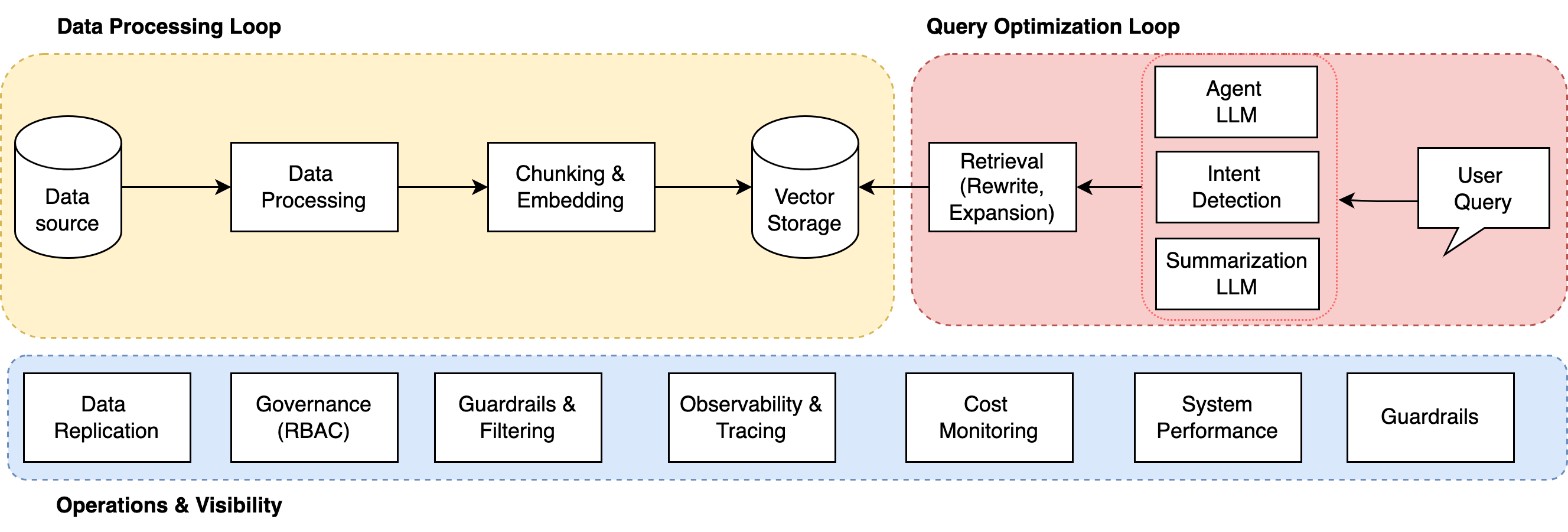

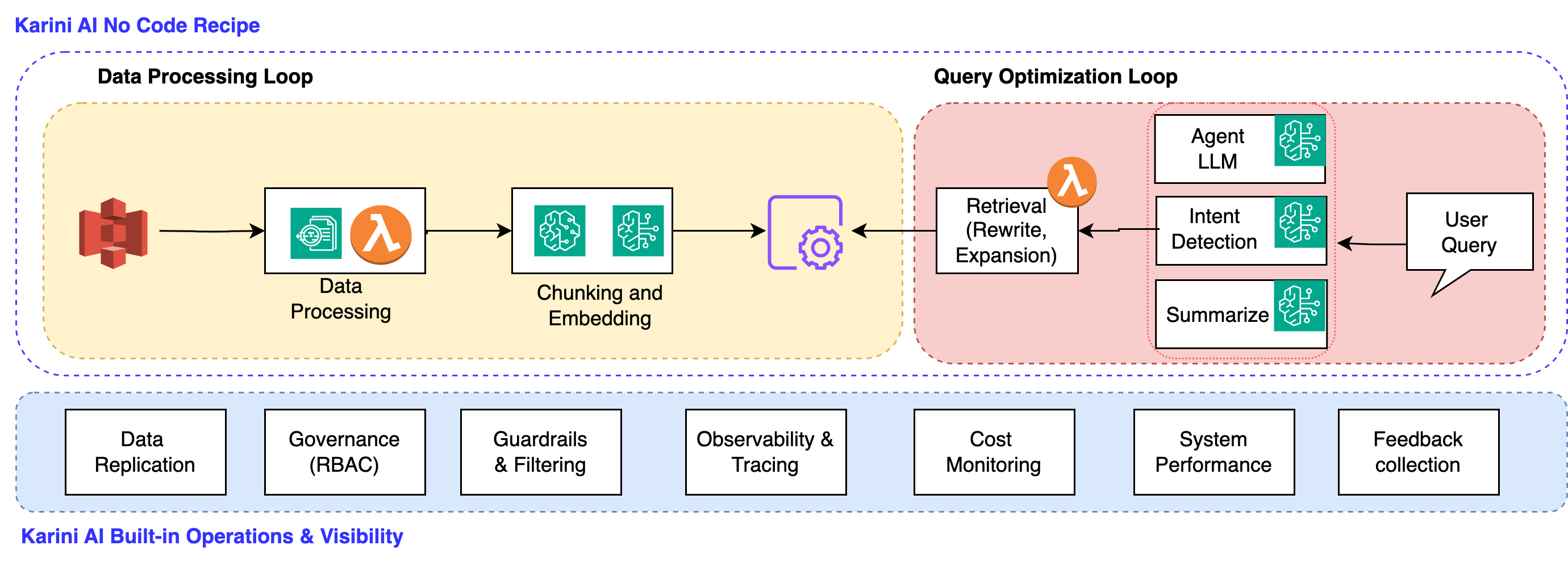

A Compound AI System addresses AI tasks through multiple interconnected components, including several calls to different models, retrievers, or external tools. AI models are constantly improving, with scalability seemingly limitless. However, complex, multifaceted compound systems increasingly achieve the most advanced results. Combining the models with other components allows businesses to build dynamic systems that can address complex scenarios based on user queries at runtime, reduce model hallucinations, and increase user control and trust. Enterprises can design their compound systems based on their performance goals. E.g., in some applications, even the largest model may need to be more performant or too expensive. Still, an ensemble of smaller fine-tuned models augmented with optimized search and retrieve capabilities can give the best results. Github Copilot is an excellent example of this approach. While enterprises are making a shift in compounding AI systems, the emerging challenges are how to design, optimize & operate these systems. The compound systems consist of a data processing loop, query optimization loop, and operations management capabilities, and they can be independently optimized for better performance.

Karini AI Platform powered by AWS Gen AI for Compound AI Systems

AWS provides a broad set of Gen AI managed services such as Amazon Bedrock, Amazon SageMaker, and OpenSearch to build scalable generative AI applications. Amazon Bedrock is the most trusted and scalable fully managed service that offers a choice of high-performing foundation models from leading AI model providers and Amazon via a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Karini AI is a no-code Generative AI platform with a broad set of capabilities to build Compound AI systems purposefully built using AWS services to speed up production-grade application development. AWS customers can use best-of-breed capabilities to build production-grade RAG in a matter of minutes.

Data Processing Loop: Karini AI utilizes Amazon Textract and proprietary technologies to create LLM-ready data and provides built-in chunking algorithms. Customers can choose Amazon Bedrock hosted models or custom models hosted via Amazon SageMaker for chunking. Amazon OpenSearch delivers a secure and scalable vector store.

Query Optimization Loop: Karini AI employs the easy-to-use Prompt Playground to author, test, and compare the model performance of Bedrock-hosted models or custom models using Amazon SageMaker. Enterprises can leverage one of the many built-in chains, such as Q&A, summarization, classification, or Agentic workflows. Multiple ways are available to optimize retrieval using techniques such as query rewrite, query expansion, and context generation. Customers can also customize LLM-driven responses for greetings and follow-up questions.

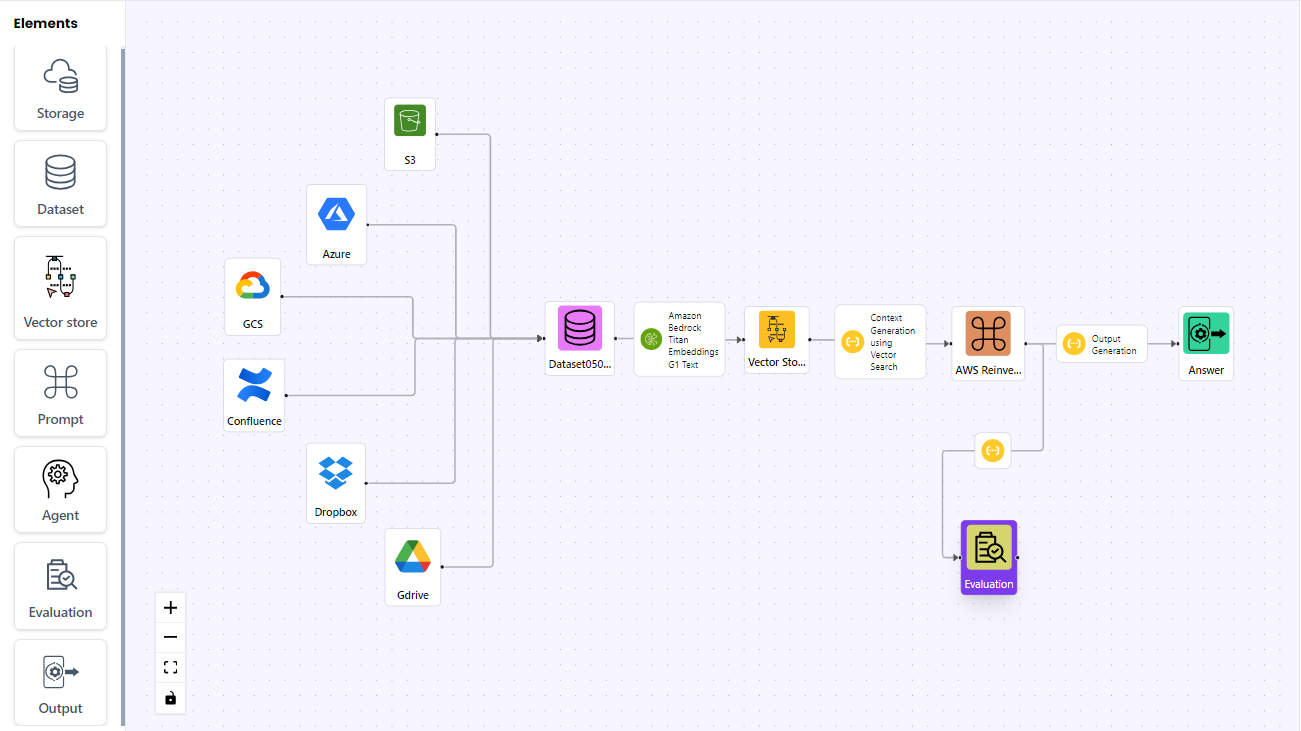

Operations and Visibility: Karini AI provides built-in observability for tracing RAG chains and understanding low-performing conversations. Copilot supports fine-grained feedback collection to gather user preferences and create instruction fine-tuning datasets. The built-in dashboards provide system performance and cost monitoring across model endpoints for Amazon Bedrock and SageMaker-hosted models. Karini AI provides enterprise connectors for significant numbers of data sources such as Amazon S3, Websites, Google Storage, Azure Storage, and Dropbox to unify data silos into a single vector store and also respects source system role-based access controls during serving.

Here is a quick end-to-end Karini AI Generative AI recipe powered by Amazon Bedrock models.

Conclusion

Compound AI systems mark a significant advancement in AI technology by integrating various components to solve complex challenges that were once out of reach for traditional AI models. These systems are highly flexible, allowing for tailored responses and greater control over outputs. Karini AI’s advanced platform, coupled with Amazon Bedrock, enables the creation of sophisticated compound AI systems for any use case. By adopting these systems, businesses can enhance innovation, increase the quality and reliability of their AI solutions, and build stronger trust with their customers.